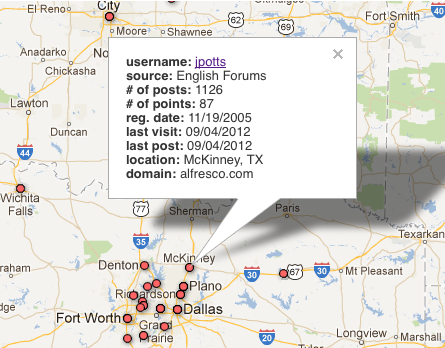

We needed to see Alfresco forum users by geography. Google Fusion Tables can plot any geographic location stored in one or more columns on a map. We had used it successfully before for smaller batches of mostly static data, so I decided to see if it would work well for our forum data. This blog post is about what I did, including some useful tips for working with the Google Fusion Tables API.

Determining the Location

First, I needed a city and country for each forum user. In our forums, users can declare their location, but not everyone does. So I wrote a little Python script that uses the MaxMind GeoLite database to determine a location for each user based on IP address. The script then compares the IP-determined location with the user’s declared location, and if they differ, it asks the person running the script to choose which one is likely to be more accurate. For example, the IP-address-based lookup might come back with “Suriname” while the user’s declared location is “Paramaribo, Suriname”, so you’d choose the latter. The script saves each decision so that it doesn’t have to ask again for the same comparison on this run or subsequent runs.
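The compare-and-remember step can be sketched roughly as follows. This is a minimal illustration, not the actual script: the function names, the JSON decision file, and the `ask` callback are all hypothetical, and the IP lookup itself is assumed to have already produced `ip_location`.

```python
import json
from pathlib import Path


def load_decisions(path):
    """Return previously saved choices, or an empty dict on the first run."""
    p = Path(path)
    return json.loads(p.read_text(encoding="utf-8")) if p.exists() else {}


def save_decisions(path, decisions):
    """Persist the choices so later runs do not have to ask again."""
    Path(path).write_text(json.dumps(decisions, indent=2, sort_keys=True), encoding="utf-8")


def resolve_location(ip_location, declared_location, decisions, ask=input):
    """Pick one location, prompting the operator only for unseen conflicts."""
    ip_location = (ip_location or "").strip()
    declared_location = (declared_location or "").strip()
    if not declared_location:
        return ip_location
    if not ip_location or ip_location.lower() == declared_location.lower():
        return declared_location
    key = f"{ip_location}|{declared_location}"  # hypothetical cache key format
    if key not in decisions:
        answer = ask(f"[1] {ip_location}  [2] {declared_location}  Which is more accurate? ")
        decisions[key] = declared_location if answer.strip() == "2" else ip_location
    return decisions[key]
```

Calling `save_decisions` once at the end of a run is enough for the same comparison to be answered from the file next time.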

Loading the Data into Google Fusion Tables with Python

Once I had a city and country for each forum user, I had to get them loaded into a Google Fusion Table. I found this Python-based Fusion Tables client and it worked quite nicely.

Here are a few tips that might save you some time when you are working with Google Fusion Tables, regardless of the client-side language…

Don’t Update: Drop, then Add

I started by trying to be smart about updating existing records rather than inserting new ones. But this meant that for each row, I had to do a query to test for the existence of a match and then do an update. This was incredibly slow, especially because you can’t do bulk updates (see next point).

So every time I run an update, the script first clears out the table. That means I load the entire dataset every time there is an update, but that is much faster than the update-if-present-otherwise-insert approach.
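As a rough sketch, the clear-then-reload approach amounts to one delete statement followed by one insert per row. The helper names below are hypothetical, the column names are assumed to be simple (no spaces), and backslash-escaping of single quotes is an assumption about the Fusion Tables SQL dialect rather than something taken from the original script.

```python
def sql_quote(value):
    """Quote a value as a SQL string literal (backslash escaping is an assumption)."""
    text = str(value).replace("\\", "\\\\").replace("'", "\\'")
    return f"'{text}'"


def build_reload_statements(table_id, columns, rows):
    """Return one DELETE that empties the table, then one INSERT per row."""
    column_list = ", ".join(columns)  # assumes column names without spaces
    statements = [f"DELETE FROM {table_id}"]
    for row in rows:
        values = ", ".join(sql_quote(row[c]) for c in columns)
        statements.append(f"INSERT INTO {table_id} ({column_list}) VALUES ({values})")
    return statements
```

The delete would be sent on its own first; the inserts that follow are what get loaded on every run.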

Batch Your Queries

The Google Fusion Tables API supports bulk inserts. You can execute up to 500 at a time, if I recall correctly. This is a huge time-saver. My script just adds the insert statements to a list, and when the list reaches 500 (or the script runs out of inserts), it joins the list on “;” and executes the batch with a single call to the Fusion Tables API.

The one drawback, as mentioned in the previous point, is that the API does not support bulk updates; only inserts can be batched. But with the performance gain of bulk inserts, I don’t mind clearing out the table and re-inserting.
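The batching described above might look something like this minimal sketch. The function name is hypothetical, and the 500 limit is the recalled figure from the text, not a verified API constant.

```python
def batch_statements(statements, batch_size=500):
    """Yield ';'-joined strings holding at most batch_size statements each."""
    batch = []
    for statement in statements:
        batch.append(statement)
        if len(batch) == batch_size:
            yield ";".join(batch)
            batch = []
    if batch:  # flush whatever is left when the inserts run out
        yield ";".join(batch)
```

Each yielded string is then sent with a single API call, so 10,000 inserts become 20 requests instead of 10,000.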

Throttle Your Requests

If the script exceeds 30 requests per minute, it is highly likely to get rate-limited, so it is important to throttle your requests. I found that a 2.5-second wait between queries (about 24 requests per minute) was fine, and because the inserts are batched 500 at a time, the wait really isn’t a big deal.
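One simple way to enforce that spacing is a small helper that sleeps only for whatever part of the interval has not already elapsed. This is a sketch under stated assumptions: the `Throttle` class is hypothetical, and the injectable `clock` and `sleep` parameters exist only to make it easy to test.

```python
import time


class Throttle:
    """Block so that consecutive calls are at least min_interval seconds apart."""

    def __init__(self, min_interval=2.5, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
```

Calling `throttle.wait()` immediately before each batch request keeps the script at or under 24 requests per minute with the default interval.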

Geocoding Takes Time

The whole thing is pretty slick, but there is one small pain. Because all rows get dropped every time I load the table, every row has to be geocoded again, and that takes time. I believe there is an API call to ask for the table to be geocoded, but I haven’t found that to work reliably. Instead, I have to go to the table in my browser and tell Fusion Tables to geocode the table. This takes a LONG time. For a table of about 10,000 rows, it could easily take 45 minutes or more. At least it is something I can kick off and let run. I only update the table once a month. If it were more often, it would be an issue.

Voila!

That’s it! Thanks to Python and Google Fusion Tables, I now have an interactive map of forum users. Not only is the map useful to explore interactively, it also lets me run geographic queries against the table from Python, such as “find me the 20 forum users with more than X posts who work within a 20-mile radius of this spot,” which can be handy for doing local community outreach.
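For illustration, a radius query like that one could be assembled along these lines. Treat this as a sketch: the column names `Username`, `Posts`, and `Location` are hypothetical, and the `ST_INTERSECTS` / `CIRCLE` / `LATLNG` syntax (with the radius in meters) is how I understand the Fusion Tables SQL dialect, so check it against the reference before relying on it.

```python
METERS_PER_MILE = 1609.344


def radius_query(table_id, lat, lng, radius_miles, min_posts, limit=20):
    """Build a query for active users within radius_miles of a point."""
    radius_m = round(radius_miles * METERS_PER_MILE)  # circle radius is given in meters
    return (
        f"SELECT Username, Posts FROM {table_id} "
        f"WHERE Posts > {int(min_posts)} "
        f"AND ST_INTERSECTS(Location, CIRCLE(LATLNG({lat}, {lng}), {radius_m})) "
        f"LIMIT {int(limit)}"
    )
```

The resulting string would be passed to whatever query method your Fusion Tables client exposes.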