Last week, Alfresco sent a notice to its customers (link requires a support login) outlining some major changes coming with 6.0, the next major release of the software.

Last week, Alfresco sent a notice to its customers (link requires a support login) outlining some major changes coming with 6.0, the next major release of the software.

The general theme of these changes appears to be that of streamlining and simplifying the platform, both in terms of functionality it offers and in terms of how it is deployed and run.

I’m glad to see this happening–the platform is littered with good ideas that were implemented, iterated upon once or twice, and then abandoned (I’m looking at you, Web Quick Start). There is never a good time to make such significant changes to a shipping product, but it was probably past time to do this.

The high-level goals probably won’t surprise you. They can be summarized as: Better Integration, Containerization, and Enhanced REST API. The devil is in the details, and there are a few items that don’t fit neatly into those categories, so let me run-down each of the major items and give you my take.

Bye bye binary installers

Right off the bat, Alfresco says they are going to eliminate the binary installers and replace them with Docker containers. The idea is to make it easier to deploy across environments and data centers, whether those are on-premises or in the cloud.

My take: I like Docker and I know there are people running Alfresco in containers, but this isn’t going to be that interesting to most of my current clients, at least in the near-term, because they aren’t yet ready to use containers in production. Instead, it’s going to cause pain because we’re going to have to manually install Alfresco rather than use the binary installer. On the other hand, there are many things that need to be done for a production install that the binary installer does not cover, so the installer wasn’t really buying us much anyway.

Was nice knowing you, WAS

Alfresco is going to make the repository directly executable without the need for a separate servlet container. This means JBoss, WebSphere, and WebLogic will no longer be supported. The announcement says that support for running within Tomcat will also be dropped in the future, though it does not say when.

My take: I rarely see those “enterprise-y” app servers used any more, especially in the context of Alfresco. Most people run on Tomcat. But those running on Tomcat rarely run anything else besides Alfresco in the same Tomcat instance, so the value that Tomcat brings is pretty low. Making the repo directly executable nets out to a Good Thing.

Sayonara CIFS

It’s funny–CIFS is often the killer feature clients cite as the thing that made it possible for Alfresco to be their shared drive replacement. But it also seems to be a huge source of headaches. Alfresco says security vulnerabilities have made continuing with it untenable. Customers should switch to AOS for Windows clients and WebDAV for non-windows clients. AOS is shipped with Community Edition, but it is not open source.

My take: So many problems, so tough to debug. Good riddance. I will say that AOS has not been without its share of problems, and with AOS not being open source, we’re completely reliant upon Alfresco here.

So long Solr 1

Alfresco 6.0 will leverage Solr 6.0. Solr 1 is being dropped and Solr 4 is sticking around to help customers migrate to Solr 6.

My take: Alfresco has been playing catch-up with Solr for a long time, so anything that keeps it moving forward is good.

Disconnect the Share Connector

The Share Connector made it possible to integrate standalone Activiti (aka, “Process Services”) with Alfresco Share. This gave you the full power of a separate Activiti instance while letting users continue to use the document management and collaboration UI they were familiar with (Alfresco Share). Now Alfresco is essentially saying, if you need both, write it yourself using the ADF.

My take: Alfresco Share is essentially no longer being developed, so why continue to maintain a connector between Activiti (aka, “Process Services”) and Share? Agreed, it doesn’t make sense. And the more I look at the ADF, the more apparent it is that the true value of the framework is only realized if you are using both Process Services and Content Services. Otherwise, you end up stripping out a bunch of stuff you don’t need. But that’s another blog post.

Multi-Tenancy doesn’t live here anymore

Alfresco is dropping multi-tenancy support unless you have an OEM agreement.

My take: Ah, multi-tenancy. You think you want it until you see the list of what you have to give up to use it. It’s a feature even Alfresco didn’t use in their own cloud product. Now it’s official.

Deprecation Appreciation

Okay, those are the things that are going away. But the notice also included a list of deprecations. Web Quick Start, Alfresco in the Cloud, and Share Site Components will all be deprecated with Alfresco 6.0. All three are examples of good ideas that never really “made the turn”. I suspect Alfresco in the Cloud will prove to be the most problematic in terms of transition, depending on what Alfresco replaces it with.

Share Site Components means that Site Blogs, Site Calendars, Site Data Lists, Site Links, and Site Discussion Forums are going to be considered deprecated in the 6.0 release. Alfresco says if you need those features you should rely on integrating with other products or writing your own custom code.

Most projects I work on don’t use blogs, calendars, links, or discussions. I have a client or two that are using Alfresco as a team collaboration tool, but even then, the use of those tools is fairly sparse within the company. But lots of people use Data Lists, so that one may raise an eyebrow or two.

A Data List is just a collection of content-less objects of the same type. To Do Lists and Contact Lists are simple examples but people use Data Lists for all kinds of things. The Share UI doesn’t deal with content-less objects very well–you’re supposed to be collaborating on documents so when an object does not have a content stream it looks a little ugly. The Data List UI in Alfresco Share is an answer to that. But Alfresco is encouraging the creation of custom applications with the ADF (or without) so if you need something like a Data List, the repository still supports it–it’s just up to you to develop the UI.

Dropping these lesser-used tools is okay. It does seem weird that they are being dropped before Share is officially discontinued. Why not just let them stick around until Share is dead? I suspect Alfresco knows it will be a long, long time before they can officially kill Share, so they might as well trim what they’ve got to lessen the ongoing burden and to “purify” the platform of anything that’s not strictly about content.

Developer Direction

The notice closes out with some advice for people customizing the platform which can be summarized as: Use the platform as a black box. Rather than customizing Share, write a custom application that calls Alfresco’s services. Rather than integrating Alfresco with other systems “from the inside, out” by writing code that runs in the same process and leverages the foundational, native Alfresco API, put that logic in external applications that get what they need from Alfresco via REST. This is sound advice and I think many of us made that shift a while ago.

I should note that while Alfresco would like you to use ADF to build your custom content-centric applications, you’d be wise to assess that decision carefully. Depending on exactly what you are doing you may be better off without it.

There are some confusing statements about workflow. Here’s what the announcement says (emphasis is theirs): “In order to make it easier to design, deploy, and maintain custom workflows, in a future release we will be providing a platform-wide workflow service using Alfresco Process Services (powered by Activiti). This will replace the use of embedded Activiti for custom workflows. Future custom workflows will be implemented external to the Content Repository and will leverage the REST APIs of Alfresco Content Services.”

That sounds to me like they are removing embedded Activiti from the repository. However, in the next bullet, they say, “ACS workflows are intended to automate the management of content items within the Content Repository and APIs for custom workflows will continue to be available with subscriptions to Alfresco Content Services.” That sounds like there will still be a way to do workflows within Alfresco, but it isn’t clear whether or not that will still be Activiti or something else.

This was a long post, but, as you can see, they had a lot to announce. On the whole, I think they are positive, necessary changes. Small customers that are trying to use Alfresco for a lot of things, particularly collaboration, may feel some pain as they will be forced to look elsewhere for that kind of functionality. Very large customers, who often leverage Alfresco only for the repository and almost always have a custom front-end, may not be affected at all, and to the extent they already use containers, may benefit.

Regardless of customer size, we’ll all benefit from a more svelte platform that Alfresco and the community are better able to support.

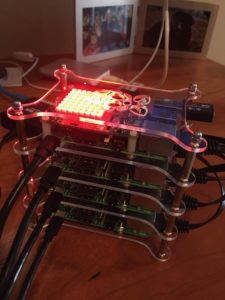

Photo Credit: Richard Summers, CC BY 2.0

I love virtual machines and containers because they make it easy to isolate the applications and dependencies I’m using for a particular project. Tools like Docker, Virtualbox, and vagrant are indispensable for most of my projects and I’m still using those, but in this post I’ll describe a product called Antsle which has given me additional flexibility and has freed up some local resources.

I love virtual machines and containers because they make it easy to isolate the applications and dependencies I’m using for a particular project. Tools like Docker, Virtualbox, and vagrant are indispensable for most of my projects and I’m still using those, but in this post I’ll describe a product called Antsle which has given me additional flexibility and has freed up some local resources. Encouraged by the success of the independently-organized, developer-focused BeeCon conference, and seeking to continue its renewed focus on developers,

Encouraged by the success of the independently-organized, developer-focused BeeCon conference, and seeking to continue its renewed focus on developers,