Alfresco DevCon is coming up, so I’ve been wondering about what kind of new and innovative things Alfresco might be sharing with us at the conference. That got me thinking about whether or not Alfresco is still innovating and if those innovations need to appeal to developers or business users for Alfresco to stay relevant. My opinion on that might surprise you. Let me explain.

Alfresco DevCon is coming up, so I’ve been wondering about what kind of new and innovative things Alfresco might be sharing with us at the conference. That got me thinking about whether or not Alfresco is still innovating and if those innovations need to appeal to developers or business users for Alfresco to stay relevant. My opinion on that might surprise you. Let me explain.

Back in 2010 I wrote a blog post called “Alfresco, NoSQL, and the future of ECM“. In that post I pointed out that NoSQL offered many features attractive to developers of content-centric solutions such as the lack of a schema, ease of replication, and their ability to scale. I predicted that new content management and document management vendors would enter the market with native NoSQL solutions, existing vendors would start to take advantage of NoSQL, and customers would develop their own content-centric solutions built on NoSQL instead of relational repositories.

It didn’t take long for all of these predictions to come true (not that they were much of a stretch!). New content management players like Contentful and CloudCMS arrived (See “The Emerging Content-as-a-service market“, 2014), both of which rely heavily on NoSQL stores.

Nuxeo, who Gartner named a visionary in the ECM space, now offers MongoDB instead of or along-side a relational database. Nuxeo claims to be the most performant content services platform on the market, due in large part to their move to a NoSQL back-end.

Alfresco never did anything serious around NoSQL but it is interesting to note that one of their partners did. Chicago-based Technology Services Group made a big investment in Hadoop back in 2015, essentially offering it as a back-end alternative to Documentum and Alfresco as part of their OpenContent offering. TSG has multiple clients on Hadoop including a not-for-profit, a pharmaceutical firm, and a nuclear power plant. According to TSG’s founder and president, Dave Giordano, his clients running the Hadoop-based repository couldn’t be happier. Now the firm has added Amazon’s DynamoDB as an additional back-end repository option.

TSG is providing Hadoop and Dynamo as back-end options for their business solutions. But what about something developers can take advantage of when building their own solutions? Some colleagues and I did some experimentation a couple of years ago around building a simple content repository using DynamoDB for metadata storage, Amazon S3 for object storage, and Lambda for the API and it worked pretty well.

Sometimes all you really need is a place to store digital objects and a place to manage metadata about those objects. You don’t need a full ECM platform installation to do that. When TSG sells OpenContent it is the solution they are selling–the back-end is just an implementation detail.

Which brings me back to that 2010 blog post. In addition to predictions about NoSQL eventually being a featured architectural component of content management systems, I also wondered what the rise of NoSQL meant for Alfresco:

“Where does that leave Alfresco? It seems their positioning as a developer-focused, “Internet-scale” repository ultimately leads to them competing directly against NOSQL repositories for certain types of applications.” — Jeff Potts, 2010

I actually worked at Alfresco around this time. Part of my job was to reach out to developers to convince them to build their solutions on top of Alfresco. The broader developer audience was not on board. A big reason is that those developers were already using things like MongoDB and CouchDB for JSON stores. These were much lighter, more flexible, and far more scalable. There is just no comparison between native JSON repositories and Alfresco by these measures.

Several years later, I still get inquiries from people that can be summarized as, “We’re thinking of building this custom solution that has nothing to do with managing office documents but does need an unstructured repository. Do you think Alfresco would be a good fit?”. The answer is usually no. This isn’t a knock on Alfresco–it’s just about purpose-of-fit. If you don’t need versioning, check-in/check-out, online editing, or transformations, why pay the overhead?

So, to answer the question from my past self about where that leaves Alfresco, it was never really a contest. Developers adopted technologies like MongoDB and others in droves. Rather than a light-but-scalable piece of infrastructure that devs routinely incorporate into larger solutions, Alfresco is a full-fledged platform–with all of the good and bad that entails–whose price tag and footprint demand serious justification before being implemented.

What this means for Alfresco today

Back when I wrote the NoSQL blog post, Alfresco thought its most likely entry point was via developers who needed a repository, grabbed Community Edition, and eventually converted into paying customers. But the very broad population of developers have other technologies–not Alfresco–top of mind when it comes to building custom applications. People are continuing to download Alfresco, but I think the “who” and “why” has shifted.

If you look at what Alfresco has done lately, the 6.0 and 6.1 releases are mostly about customization and deployment. The Application Developer Framework (ADF), the new Docker containers and Helm charts in 6.0, and SDK 4.0, which is heavily Docker-based, are all welcome additions.

Absolutely, the platform has to be easier to extend, customize, and deploy, so I’m glad to see that being addressed, but my customers don’t actually care as much about those things. There have been some great new end-user features added recently, such as the Search and Insights Engine and the Digital Workspace, but more are needed if Alfresco wants to reclaim its “visionary” status.

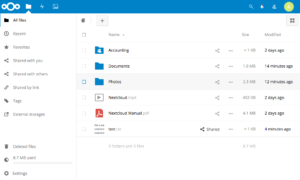

Alfresco is not in the “content repository” market. Developers can create a schema-less, scalable, replicated repository easily with NoSQL and other technologies. Scoff at the buzzwords if you want, but I think “Digital Business Platform” actually describes Alfresco really well. The key is that a “Digital Business Platform” isn’t for developers, although they need to extend and customize it. The platform is for business users.

At DevCon, we’re going to see a ton about ADF and Docker, and those topics are important to the DevCon audience. But my customers are looking for innovative, business-friendly features ready to use, out-of-the-box. It may sound strange coming from me, but those end-user innovations are what will keep Alfresco relevant and appealing to the market they are actually in.

Photo Credit: Mirror, by Vadzim Vinakur, CC BY-NC 2.0

Friday, May 25, 2018 was an auspicious day. You may remember it as the crescendo in the wave of GDPR-compliance related email notifications because that was the day everyone was supposed to have their GDPR act together. For those of us in the Alfresco Community, it was also the day that the Add-ons Directory died (or, more correctly, was killed). In fact, the two are directly related. More on that in a minute.

Friday, May 25, 2018 was an auspicious day. You may remember it as the crescendo in the wave of GDPR-compliance related email notifications because that was the day everyone was supposed to have their GDPR act together. For those of us in the Alfresco Community, it was also the day that the Add-ons Directory died (or, more correctly, was killed). In fact, the two are directly related. More on that in a minute. Alfresco Software, Inc. has announced that

Alfresco Software, Inc. has announced that