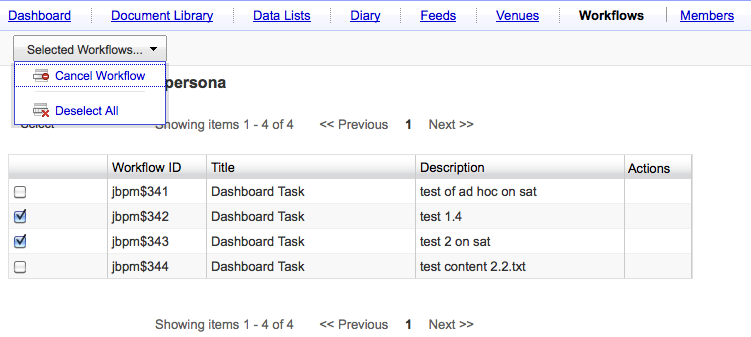

This is the second part of a two-part post on some recent work Metaversant did with Alfresco workflows. The first part was a post on Workflow Reporting. It outlined a high-level recipe for creating a workflow dashboard in Share that showed a list of workflows started for a specific Share site and allowed bulk actions (such as “cancel”). In this post, we’ll look at Folder Monitoring: how to automatically start an Alfresco jBPM workflow when a document is dropped into a folder.

The easy answer

The jBPM workflow engine embedded in Alfresco is flexible and powerful. Creating a new process and wiring it into the Alfresco Explorer or Alfresco Share user interface is straightforward once you’ve done it a time or two. If you need a refresher on the details, see “Get your Alfresco ‘flow on”. For the purposes of this discussion, just know that your process definition can have process variables which you can read and set from the user interface and from code within the process definition.

It’s actually really easy to start a workflow when objects are created or updated in a folder. That’s because the Alfresco JavaScript API can run the “start-workflow” action, and Alfresco JavaScript can be invoked from a rule that runs when something is updated in a folder. The JavaScript to start a workflow using this approach looks like this:

var startWorkflowAction = actions.create("start-workflow");

startWorkflowAction.parameters.workflowName = "jbpm$wf:adhoc";

startWorkflowAction.parameters["bpm:assignee"] = assignee;

startWorkflowAction.parameters["bpm:workflowDescription"] = description;

startWorkflowAction.execute(document);

The catch is that when you use that approach, the developer writing the JavaScript has to know at design-time what values to provide to the workflow when it starts up (in this case, the assignee and the description). Ordinarily, when a workflow is manually invoked, the end-user starting the workflow is there to provide those values. If your particular workflow doesn’t require any variables to start, or the variables are known at design-time, the simple rule-invokes-JavaScript approach will work. Otherwise, something more is required.

What we needed for this client was not only the ability to start a workflow when a new object landed in a folder, but also the ability for an end-user to specify parameters for that workflow ahead of time. When discussing the functionality we called it “precompiled workflows” and “workflow templates”, which is pretty descriptive of what we needed. From the user’s perspective, we needed one user (let’s call her a Manager) to be able to say, “When an object is created in this folder, launch this specific workflow with these parameters”. When other users (or systems) create objects in that folder, the workflow needs to launch automatically without further input.

Options considered

As usual, there are a few different ways to go about this. One is the rule-invokes-JavaScript approach discussed earlier. The problem with this is that the parameters have to be specified in the JavaScript and that won’t work for non-technical end-users. This option was discarded early.

The next option we considered was creating a custom action. This almost gets us there. The Manager user can create a rule on a folder and select the custom action. The form service (Share was the main UI in this case) can be configured to let the Manager set the parameters to use when launching the workflow. Non-Manager users can then create objects and workflows will launch automatically.

The problem with this approach is that the list of parameters is not finite: this client had big plans for the workflow engine and they did not want to modify the custom workflow launching action every time they created a workflow that had a new parameter. As with the first option, this approach has worked well in the past, but it didn’t fit for this client, so we moved on.

The third option we looked at was a variation on the second. Rather than having the action handler responsible for grabbing parameter values, this approach uses the workflow model itself to persist a “workflow configuration” that the action can point to. The Manager would create a workflow configuration, then configure the rule to say, “When documents are added to this folder, start this workflow with this configuration”.

The workflow configuration could be a custom object. Briefly, we considered actually persisting objects that correspond to the types defined in the workflow model (normally, those aren’t saved anywhere), and I think if we ever revisit this option, we may look at that again.

The problem with this approach was that the Manager has to think too much. Am I creating a workflow that’s going to launch other workflows? Okay, then I need to create a “workflow configuration” and configure a rule. Am I just routing something through a workflow? Okay, then I need to use “start workflow” like I normally would. That’s way too confusing. Next!

Implementation

Ultimately, we decided that the easiest route from both an implementation perspective and an end-user perspective was to rely on the business process to be smart enough to tell the difference between a package with a folder and a package with documents. When the workflow is run on a folder, it can iterate over the children of the folder, spawning new workflows and copying its process variables into the newly started workflows. It can then transition to a wait state until something tells it to go check for new children in the folder. When the same workflow is run on a document, however, it proceeds down the “non-folder” route and performs the work it normally would.

Using this approach, every workflow is able to run as both a “folder monitor” and a “worker”. That way, when a Manager starts a workflow, she doesn’t have to think about whether she’s starting a “monitor” workflow or a one-time workflow; she just starts the workflow as normal and sets the parameters. The business process does the work of spawning additional workflows when it needs to and passes those parameters along. Now we’re talking!

High-level Recipe

Similar to my previous post on workflow dashboards, I may make this source available at some point, but until then, here are the high-level steps to do it yourself.

Create the jBPM process definition

This will work with any process definition, so I won’t describe anything business-specific here. Instead, I’ll talk about what you would add to your process definition to make this work.

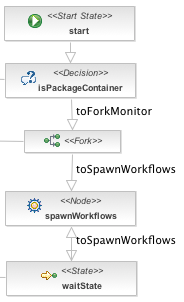

The first thing the business process needs to do is make a decision: Am I running against a folder or a document? If the package contains documents, the workflow continues as it normally would. If, however, it contains a folder, the workflow iterates over the folder’s children and starts a new workflow instance for every child in the package, copying its process variables into the new workflows.

The decision is implemented as JavaScript. If we find one folder, we take the folder route. In this case, the users are only going to run the workflow on one folder at a time, so we don’t have to worry about what to do if the workflow package contains a mix of documents and folders. The decision JavaScript looks like this:

var flag = false;

for (var i = 0; i < bpm_package.children.length; i++) {

if (bpm_package.children[i].isContainer) {

flag = true;

break;

}

}

isContainer = flag;

And the transitions for the decision look like this:

<transition to="forkWork" name="toForkWork"></transition>

<transition to="forkMonitor" name="toForkMonitor">

<condition>#{isContainer == true}</condition>

</transition>

The forkMonitor node is a fork that creates a workflow dashboard task (see previous post) and simultaneously transitions to the spawnWorkflows node. The spawn workflows code is a little too lengthy to include here, but what it does is:

- Grabs the parameters it needs to pass in to each of the newly started workflows

- For each document in the workflow package:

  - Checks to make sure the document hasn’t already been processed (more on that later)

  - Checks to make sure the document isn’t already in a workflow

  - Starts the workflow using the out-of-the-box start-workflow action (see the code at the start of the article)
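The steps above can be sketched roughly as follows. This is not the client's code, just a minimal illustration under some assumptions: the function name `spawnWorkflows`, the `params` object shape, and the status property name `ex:workflowStatus` are all hypothetical, and `activeWorkflows` is assumed to be available on each node as a list of in-flight workflows. The `actions` object is passed in as an argument so the function can be exercised with stubs outside of Alfresco.

```javascript
// Sketch of the "spawn workflows" step. Names are hypothetical, not the client's code.
// folder     - the folder node found in the workflow package
// params     - { workflowName: "...", variables: { "bpm:...": value, ... } }
// actions    - the Alfresco JavaScript "actions" root object (or a stub)
// statusProp - name of the "workflow status" property (assumed, e.g. "ex:workflowStatus")
function spawnWorkflows(folder, params, actions, statusProp) {
  var started = [];
  for (var i = 0; i < folder.children.length; i++) {
    var child = folder.children[i];

    // Only documents get a worker workflow.
    if (child.isContainer) continue;

    // Skip documents that have already been through the process.
    if (child.properties[statusProp]) continue;

    // Skip documents that are already in a workflow (assumed node property).
    if (child.activeWorkflows && child.activeWorkflows.length > 0) continue;

    // Start the workflow with the out-of-the-box action, copying the
    // monitor workflow's parameters into the new instance.
    var action = actions.create("start-workflow");
    action.parameters.workflowName = params.workflowName;
    for (var key in params.variables) {
      if (Object.prototype.hasOwnProperty.call(params.variables, key)) {
        action.parameters[key] = params.variables[key];
      }
    }
    action.execute(child);
    started.push(child.name);
  }
  return started; // names of the documents a workflow was started for
}
```

Inside the process definition, a call might look something like `spawnWorkflows(bpm_package.children[0], params, actions, "ex:workflowStatus")`, where `params` is built from the monitor workflow's own process variables.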

Once the workflows are spawned, the business process transitions to a wait state where the process sits indefinitely until it is told to check the folder for new children.

Create a custom action that will tell the process definition to check for children

How does the workflow know when new children have been added to the folder? I’m so glad you asked. After the workflow spawns new workflows for the children, it transitions to a wait state. To trigger the workflow to move off the wait state, we used a custom rule action. The rule action is set high enough up the folder hierarchy that end-users don’t have to worry about it; it automatically inherits onto the folders created below it. The rule action is Java-based, and it takes two parameters: the name of a node and the name of a transition to take.

When a new document is added to a folder, the rule triggers the action. The action grabs the node’s parent and checks to see if it is involved in a workflow. If it is, it tries to find the workflow node named in the parameter, which will be the wait state mentioned in the previous step. If it finds that, it signals the node to take the specified transition. The transition will be to the “spawn workflows” node.
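The real action was written in Java, but its control flow is simple enough to model in a few lines of plain JavaScript. The sketch below is only that, a model: `signalParentWorkflow`, `findWorkflowsFor`, and the shape of the workflow and path objects are hypothetical stand-ins for the lookups the Java action would do through Alfresco's workflow service.

```javascript
// Plain-JavaScript model of the rule action's logic (the actual action was Java-based).
// node             - the newly added document
// nodeName         - action parameter: name of the wait-state node to look for
// transitionName   - action parameter: name of the transition to take
// findWorkflowsFor - hypothetical lookup returning the active workflows for a node;
//                    each workflow is assumed to expose "paths", and each path a
//                    "currentNode" name and a "signal(transition)" function
function signalParentWorkflow(node, nodeName, transitionName, findWorkflowsFor) {
  var parent = node.parent;
  if (!parent) return false; // nothing to signal

  // Is the parent folder involved in any workflows?
  var workflows = findWorkflowsFor(parent);
  for (var i = 0; i < workflows.length; i++) {
    var paths = workflows[i].paths;
    for (var j = 0; j < paths.length; j++) {
      // Only signal a workflow that is sitting on the named wait state.
      if (paths[j].currentNode === nodeName) {
        paths[j].signal(transitionName);
        return true;
      }
    }
  }
  return false; // parent is not being monitored, or is not waiting
}
```

Returning quietly when nothing matches matters here: because the rule is inherited by every folder below it, most of the documents that trigger it will land in folders that are not being monitored at all.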

The combination of the wait state and the spawn workflows node in the business process with the custom rule action that signals the wait state creates a cool little “interrupt” loop: The process spawns workflows for folder children, then waits until more children arrive, then spawns workflows for those children, and so on until someone kills the workflow.

Customize the Share user interface to allow workflows to start on folders

Since the dawn of time Alfresco’s UI has not allowed workflows to start on folders, but the workflow engine can handle it. For this approach to work, we have to be able to start workflows on folders. Making that happen in Share is a simple config tweak: copy the existing assign workflow action link from the document actionSet to the folder actionSet in documentlist.get.config.xml (and document-actions.get.config.xml), like this:

<actionSet id="folder">

...

<action type="action-link" id="onActionAssignWorkflow" permission="permissions" label="actions.document.assign-workflow" />

...

</actionSet>

Voila! Now you can run workflows against folders.

Create a “workflow status” aspect to avoid processing docs more than once

The last step is to make sure that documents only run through the process once. Otherwise, every time the process spawns workflows for children in a folder, it will start one up for every child in the folder, regardless of whether or not it has been through the workflow already. This may be what you want, but this particular client wanted docs to run through the process only once.

To do this, we created a simple aspect with a “workflow status” property. In the last node of the process, the property gets set. When the spawn workflows code runs, it filters out folder children that have the status set.
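As a rough sketch, the script in the last node of the process might look something like the following. The aspect and property names (`ex:workflowStatusable`, `ex:workflowStatus`) and the function name `markProcessed` are hypothetical; substitute whatever your own content model defines. The node calls used (`hasAspect`, `addAspect`, `properties`, `save`) are the ones the Alfresco JavaScript API provides on nodes.

```javascript
// Hypothetical aspect and property names; use the ones from your own content model.
var STATUS_ASPECT = "ex:workflowStatusable";
var STATUS_PROP = "ex:workflowStatus";

// Stamp every document in the workflow package with a status value so the
// spawn workflows step can filter it out the next time the folder is checked.
function markProcessed(pkg, status) {
  for (var i = 0; i < pkg.children.length; i++) {
    var doc = pkg.children[i];
    if (!doc.hasAspect(STATUS_ASPECT)) {
      doc.addAspect(STATUS_ASPECT);
    }
    doc.properties[STATUS_PROP] = status;
    doc.save(); // persist the property change
  }
}
```

In the process definition this would be called along the lines of `markProcessed(bpm_package, "Complete")`; the actual status value is up to you, since the spawn step only needs to know whether the property is set.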

That’s it!

This approach puts the burden on the workflow designer to use some standard node names and logic in their process definitions. And, it will result in many in-flight workflows (at least one for every folder being monitored), although that shouldn’t be a big deal from a performance perspective (running workflows really aren’t “running”).

The important thing for this client is that it provides a nice way for users to essentially “pre-configure” workflows so that subsequent users can start workflows simply by adding documents to a folder, all without anyone having to learn any new “workflow configuration” constructs. And, workflow designers can easily make their workflows “folder aware” or “templatable”, depending on how you want to look at it, all within the process definition, without having to recompile any custom actions or tweak JavaScript.